CORGi @ CMU

Computer Organization Research Group led by Prof. Nathan Beckmann

Smart Caching at Datacenter Scale

Datacenter applications at companies such as Google, Facebook, and Microsoft serve enormous workloads consisting of petabytes of data. Caching plays a critical part in serving these workloads at high throughput and low cost, but datacenters’ massive scale introduces many new challenges for caching systems. To keep costs low, datacenter caches must combine technologies (DRAM, NVM, flash) that differ in cost and performance and that have other unique properties, such as the limited write endurance of flash and NVM.

This project aims to build smart, high-performance, and low-cost caching systems for datacenter applications. We are focused on both theory and practice. On the theory side, we extend and apply caching theory to address the new challenges at datacenter scale. On the practical side, we are designing and implementing caching systems that combine lessons from theory with engineering insights to make the best use of diverse technologies.

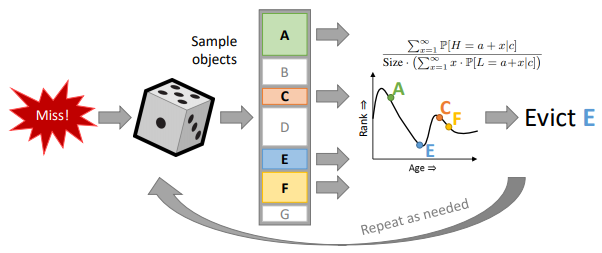

The image above sketches how LHD (NSDI’18) implements a theoretically grounded eviction policy based on Bayesian inference. Its design is inspired by recent high-associativity processor caches that rely on statistical sampling. We are currently building caching systems that make efficient use of flash-based SSDs for very small objects, and co-designing caches with the backing storage system to optimize end-to-end cost across the datacenter.
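To make the sampling idea concrete, here is a minimal Python sketch of sampled eviction: rather than keeping a global ordering of objects, the cache draws a few random candidates and evicts the lowest-ranked one. This is an illustration only, not the LHD implementation. The class `SampledCache`, its parameters, and in particular the `_score` formula are hypothetical; the score is a crude stand-in that favors small, frequently hit, recently used objects, whereas LHD estimates hit density from learned statistics.

```python
import random


class SampledCache:
    """Toy byte-capacity cache that evicts by sampling and ranking candidates."""

    def __init__(self, capacity_bytes, sample_size=8, seed=0):
        self.capacity = capacity_bytes
        self.sample_size = sample_size
        self.used = 0
        self.clock = 0                 # logical time, advanced on every access
        self.items = {}                # key -> [size, hits, last_access]
        self.rng = random.Random(seed)

    def _score(self, key):
        # Hypothetical ranking (an assumption, NOT the LHD estimator):
        # more hits, smaller size, and more recent use give a higher score.
        size, hits, last_access = self.items[key]
        age = self.clock - last_access + 1
        return (hits + 1) / (size * age)

    def _evict_one(self):
        # Sample a few resident keys and evict the lowest-scoring one.
        k = min(self.sample_size, len(self.items))
        candidates = self.rng.sample(list(self.items), k)
        victim = min(candidates, key=self._score)
        self.used -= self.items.pop(victim)[0]
        return victim

    def access(self, key, size):
        """Return True on a hit; on a miss, insert the object if it fits."""
        self.clock += 1
        if key in self.items:
            entry = self.items[key]
            entry[1] += 1
            entry[2] = self.clock
            return True
        if size > self.capacity:
            return False               # object can never fit, so bypass it
        while self.used + size > self.capacity:
            self._evict_one()
        self.items[key] = [size, 0, self.clock]
        self.used += size
        return False


cache = SampledCache(capacity_bytes=100)
cache.access("small", 10)
cache.access("small", 10)   # hit
cache.access("big", 90)
cache.access("new", 20)     # forces an eviction
print(sorted(cache.items))
```

With only two resident objects the sample covers the whole cache, so the large, never-hit object is the one evicted. In a larger cache the sample size trades ranking quality against per-eviction work.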

Check out our frequent collaborators on the PDL caching project.

CORGi Members

Publications

FairyWREN: A Sustainable Cache for Emerging Write-Read-Erase Flash Interfaces [pdf]

Sara McAllister, Sherry (Yucong) Wang, Benjamin Berg, Daniel Berger, George Amvrosiadis, Nathan Beckmann, Gregory R. Ganger. ACM Transactions on Storage 2025.

A Call for Research on Storage Emissions [pdf]

Sara McAllister, Fiodar Kazhamiaka, Daniel Berger, Rodrigo Fonseca, Kali Frost, Aaron Ogus, Maneesh Sah, Ricardo Bianchini, George Amvrosiadis, Nathan Beckmann, Gregory R. Ganger. HotCarbon 2024.

FairyWREN: A Sustainable Cache for Emerging Write-Read-Erase Flash Interfaces [pdf]

Sara McAllister, Sherry (Yucong) Wang, Benjamin Berg, Daniel Berger, George Amvrosiadis, Nathan Beckmann, Gregory R. Ganger. OSDI 2024.

Baleen: ML Admission & Prefetching for Flash Caches [pdf]

Daniel Lin-Kit Wong, Hao Wu, Carson Molder, Sathya Gunasekar, Jimmy Lu, Snehal Khandkar, Abhinav Sharma, Daniel Berger, Nathan Beckmann, Gregory R. Ganger. FAST 2024.

Brief Announcement: Spatial Locality and Granularity Change in Caching [pdf]

Nathan Beckmann, Phillip Gibbons, Charles McGuffey. SPAA 2022.

Spatial Locality and Granularity Change in Caching [pdf]

Nathan Beckmann, Phillip Gibbons, Charles McGuffey. arXiv 2022.

Kangaroo: Theory and Practice of Caching Billions of Tiny Objects on Flash [pdf]

Sara McAllister, Benjamin Berg, Julian Tutuncu-Macias, Juncheng Yang, Sathya Gunasekar, Jimmy Lu, Daniel Berger, Nathan Beckmann, Gregory R. Ganger. ACM Transactions on Storage 2022.

Kangaroo: Caching Billions of Tiny Objects on Flash [pdf]

Sara McAllister, Benjamin Berg, Julian Tutuncu-Macias, Juncheng Yang, Sathya Gunasekar, Jimmy Lu, Daniel Berger, Nathan Beckmann, Gregory R. Ganger. SOSP 2021.

Brief Announcement: Block-Granularity-Aware Caching [pdf]

Nathan Beckmann, Phillip Gibbons, Charles McGuffey. SPAA 2021.

The CacheLib Caching Engine: Design and Experiences at Scale [pdf]

Benjamin Berg, Daniel Berger, Sara McAllister, Isaac Grosof, Sathya Gunasekar, Jimmy Lu, Michael Uhlar, Jim Carrig, Nathan Beckmann, Mor Harchol-Balter, Gregory R. Ganger. OSDI 2020.

Writeback-Aware Caching [pdf]

Nathan Beckmann, Phillip Gibbons, Bernhard Haeupler, Charles McGuffey. APoCS 2020. (Best Paper.)

Brief Announcement: Writeback-Aware Caching [pdf]

Nathan Beckmann, Phillip Gibbons, Bernhard Haeupler, Charles McGuffey. SPAA 2019.

Practical Bounds on Offline Caching with Variable Object Sizes [pdf]

Daniel Berger, Nathan Beckmann, Mor Harchol-Balter. SIGMETRICS 2018.

LHD: Improving Cache Hit Rate by Maximizing Hit Density [pdf]

Nathan Beckmann, Haoxian Chen, Asaf Cidon. NSDI 2018.

Maximizing Cache Performance Under Uncertainty [pdf]

Nathan Beckmann, Daniel Sanchez. HPCA 2017.

Cache Calculus: Modeling Caches through Differential Equations [pdf]

Nathan Beckmann, Daniel Sanchez. IEEE CAL 2016.

Modeling Cache Performance Beyond LRU [pdf]

Nathan Beckmann, Daniel Sanchez. HPCA 2016.

Talus: A Simple Way to Remove Cliffs in Cache Performance [pdf]

Nathan Beckmann, Daniel Sanchez. HPCA 2015.